Just to clarify.

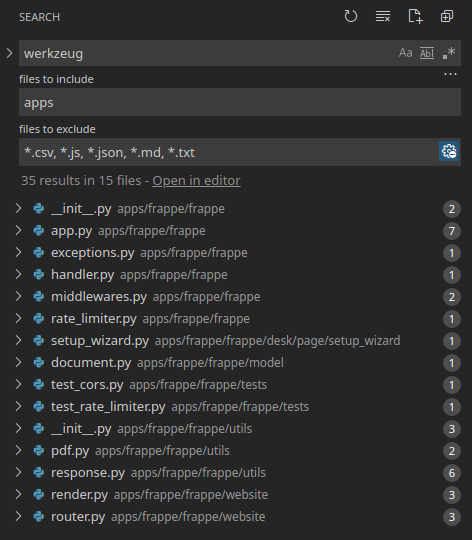

Starlette, the underlying toolkit used by FastAPI, is what can replace Werkzeug.

FastAPI seems to be opinionated and Flask-like (Flask uses Werkzeug, FastAPI uses Starlette).

Frappe Framework has its own opinions, so Starlette (starlette.io) gives more control, just like Werkzeug.

The following app.py (adapted from the Starlette docs) looks similar to frappe/app.py:

    from starlette.applications import Starlette
    from starlette.responses import (
        PlainTextResponse,
        JSONResponse,
    )
    from starlette.routing import (
        Route,
        Mount,
        WebSocketRoute,
    )
    from starlette.staticfiles import StaticFiles


    def homepage(request):
        return PlainTextResponse("Hello, world!")


    def user_me(request):
        username = "starlette"
        return PlainTextResponse("Hello, {}!".format(username))


    def user(request):
        # Path parameters declared in the route ("/user/{username}") land here.
        username = request.path_params["username"]
        return PlainTextResponse("Hello, %s!" % username)


    async def websocket_endpoint(websocket):
        await websocket.accept()
        await websocket.send_text("Hello, websocket!")
        await websocket.close()


    def startup():
        print("Ready to go")


    async def server_error(request, exc):
        print({"exc": exc})
        # Plain exceptions (the 500 case) have no .detail or .status_code,
        # so fall back to generic values instead of raising AttributeError.
        return JSONResponse(
            content={"error": getattr(exc, "detail", "Internal Server Error")},
            status_code=getattr(exc, "status_code", 500),
        )


    exception_handlers = {
        404: server_error,
        500: server_error,
    }

    routes = [
        Route("/", homepage),
        Route("/user/me", user_me),
        Route("/user/{username}", user),
        WebSocketRoute("/ws", websocket_endpoint),
        # The "static" directory must exist when the app starts.
        Mount("/static", StaticFiles(directory="static")),
    ]

    application = Starlette(
        debug=True,
        routes=routes,
        on_startup=[startup],
        exception_handlers=exception_handlers,
    )

In the app.py file we have `application`, the object that gunicorn/uvicorn loads and serves.
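To make concrete what the server expects from that object: Starlette is an ASGI toolkit, so `application` is an ASGI callable taking `scope`, `receive` and `send`. The following is only a rough sketch of that interface with no Starlette involved; `call_once` is a hypothetical helper written for this post, not part of any library.

```python
import asyncio


async def application(scope, receive, send):
    """Bare ASGI callable: the same interface a Starlette instance exposes."""
    if scope["type"] != "http":
        return  # this sketch ignores lifespan and websocket scopes
    body = b"Hello, world!"
    await send({
        "type": "http.response.start",
        "status": 200,
        "headers": [
            (b"content-type", b"text/plain; charset=utf-8"),
            (b"content-length", str(len(body)).encode()),
        ],
    })
    await send({"type": "http.response.body", "body": body})


async def call_once(app, path="/"):
    """Hypothetical helper: drive the app with one fake GET, no server needed."""
    sent = []

    async def receive():
        return {"type": "http.request", "body": b"", "more_body": False}

    async def send(message):
        sent.append(message)

    scope = {"type": "http", "method": "GET", "path": path, "headers": []}
    await app(scope, receive, send)
    return sent


messages = asyncio.run(call_once(application))
```

With a real server this would be started with something like `uvicorn app:application`; gunicorn on its own speaks WSGI, so it needs an ASGI-capable worker class (uvicorn provides one) to serve such an app.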

Edit:

What happens to “from werkzeug.local import Local, release_local”, which stores the per-request globals? A lot of code depends on frappe.local.*
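One possible direction, as far as I can tell, is Python's standard `contextvars` module, which keeps values separate per asyncio task. Below is a minimal sketch under that assumption; `RequestLocal`, `local` and `handle_request` are hypothetical names made up for illustration, not anything from Frappe, Werkzeug or Starlette.

```python
import asyncio
from contextvars import ContextVar

# Hypothetical stand-in for frappe.local: one ContextVar holding a per-request dict.
_request_state = ContextVar("request_state")


class RequestLocal:
    """Attribute-style access to the state of the current request."""

    def __getattr__(self, name):
        try:
            return _request_state.get()[name]
        except LookupError:  # no active request, or key missing (KeyError)
            raise AttributeError(name) from None

    def __setattr__(self, name, value):
        _request_state.get()[name] = value


local = RequestLocal()


async def handle_request(user):
    # Fresh state per request; reset() plays the role of release_local().
    token = _request_state.set({})
    try:
        local.user = user
        await asyncio.sleep(0)  # yield so the two requests interleave
        return local.user
    finally:
        _request_state.reset(token)


async def main():
    # gather() runs each coroutine in its own task, each with its own context copy.
    return await asyncio.gather(handle_request("alice"), handle_request("bob"))


results = asyncio.run(main())
```

Each request sees only its own `local.user` even though both run concurrently on one thread. Whether all of frappe.local.* could be moved to something like this is a separate question that this sketch does not answer.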

I need to understand how Starlette deals with global variables. I found this: “What is the best way to store globally accessible "heavy" objects?” (Issue #374, encode/starlette on GitHub).