Have you configured the appropriate name servers and a wildcard CNAME/ANAME record pointing to your load balancer?

All of that is correct. One thing I observed after running the job: the pod was created and showed Running (1/1), but within a few seconds it went to Completed (0/1). So we added a sleep command so that we could check the logs, or at least log in to the pod. I can now log in to the pod, but the dashboard is still not showing.

I attached a screenshot for your reference. I also checked ingress-nginx: 2 of its pods are in Completed state as well.

Completed means success. All good.

Error means failed.

When I check the endpoint URL, it returns a 503 error (Service Temporarily Unavailable). Why?

Check the erpnext pods. Are 7 to 10 pods running?

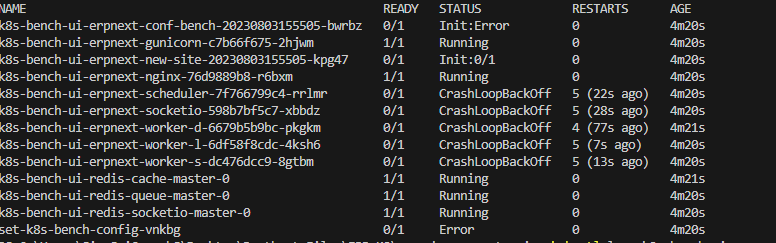

| NAME | READY | STATUS | RESTARTS | AGE |
|---|---|---|---|---|
| erpnext-v14-backup-28180800-9lhx6 | 0/1 | Completed | 0 | 32h |
| erpnext-v14-backup-28181520-nwx2w | 0/1 | Completed | 0 | 20h |
| erpnext-v14-backup-28182240-bjgv5 | 0/1 | Completed | 0 | 8h |
| erpnext-v14-gunicorn-67466895d9-kwmqb | 1/1 | Running | 0 | 4d23h |
| erpnext-v14-migrate-20230728150636-lt82z | 0/1 | Completed | 0 | 4d23h |
| erpnext-v14-nginx-767c9cd8d9-t5k48 | 1/1 | Running | 0 | 4d23h |
| erpnext-v14-redis-cache-master-0 | 1/1 | Running | 1 (4d23h ago) | 6d23h |
| erpnext-v14-redis-queue-master-0 | 1/1 | Running | 0 | 4d23h |
| erpnext-v14-redis-socketio-master-0 | 1/1 | Running | 0 | 4d23h |
| erpnext-v14-scheduler-854bd954d-6lhf4 | 1/1 | Running | 0 | 4d23h |
| erpnext-v14-socketio-5dd74876c-cqv6v | 1/1 | Running | 0 | 4d23h |
| erpnext-v14-worker-d-6bf45dff46-pmkft | 1/1 | Running | 0 | 4d23h |
| erpnext-v14-worker-l-7f9668bf48-x6dlx | 1/1 | Running | 0 | 4d23h |
| erpnext-v14-worker-s-598b984b54-2xgtm | 1/1 | Running | 0 | 4d23h |
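To answer "are 7 to 10 pods running?" mechanically, here is a minimal sketch that parses the plain-text output of `kubectl get pods` (the same NAME/READY/STATUS/RESTARTS/AGE columns as above) and separates long-running pods from finished job pods, using the rule of thumb from this thread: Completed is success, Error is failure. The function name `summarize_pods` is hypothetical, and `kubectl get pods -o json` would be a sturdier input than the column layout.

```python
def summarize_pods(kubectl_output: str) -> dict[str, list[str]]:
    """Group pods from `kubectl get pods` text output by outcome.

    Assumes the default columns: NAME READY STATUS RESTARTS AGE.
    """
    groups: dict[str, list[str]] = {"running": [], "succeeded": [], "failed": [], "other": []}
    for line in kubectl_output.strip().splitlines():
        fields = line.split()
        if not fields or fields[0] == "NAME":
            continue  # skip blank lines and the header row
        name, ready, status = fields[0], fields[1], fields[2]
        ready_now, wanted = (int(x) for x in ready.split("/"))
        if status == "Running" and ready_now == wanted:
            groups["running"].append(name)  # long-running pod, all containers ready
        elif status == "Completed":
            groups["succeeded"].append(name)  # finished job pod: success
        elif status == "Error":
            groups["failed"].append(name)  # finished job pod: failure
        else:
            groups["other"].append(name)  # e.g. CrashLoopBackOff, Pending, not ready yet
    return groups
```

Fed the pod list above as plain `kubectl get pods` text, this should report 10 running pods and 4 completed job pods (the three backups and the migration), so the deployment itself looks healthy.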

No, I ran the command `kubectl -n erpnext apply -f apps/erpnext/configure-kbi-job.yaml`. Only one pod was created, and that pod is in Completed state.

Note: I'm using an RDS database and the EFS storage driver; for Redis I'm using the local one.

Then you haven't installed the ERPNext Helm chart as a release yet?

When I tried to run this command:

`kubectl apply -f .\apps\erpnext\release.yaml`

I got this error:

Error from server (BadRequest): error when creating ".\apps\erpnext\release.yaml": HelmRelease in version "v2beta1" cannot be handled as a HelmRelease: strict decoding error: unknown field "spec.install", unknown field "spec.interval", unknown field "spec.values"

So I removed the `spec.install` and `spec.interval` sections and kept `spec.values`, but it still fails. I pasted the error above.

Another way I tried:

Following this article: Production - K8s Bench Interface

I set it up and changed the parameters in values.yaml. All the pods came up, but they are in CrashLoopBackOff state.

The YAMLs from that repo are FluxCD resources.

You'll need to take the part under `values:` from the release and make it a separate Helm values file.

To install the Helm release, use the `helm` command, not `kubectl`.

We’ve a long way to go!
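A minimal sketch of splitting out the values, assuming the FluxCD `HelmRelease` has already been loaded into a Python dict (for example with PyYAML's `yaml.safe_load`, which is not in the standard library). It writes only `spec.values` to a standalone file. JSON is used so the sketch needs only the standard library; Helm accepts it because JSON is valid YAML. The function name and output path are hypothetical.

```python
import json
from pathlib import Path


def extract_helm_values(helm_release: dict, out_path: str) -> dict:
    """Write spec.values of a FluxCD HelmRelease to a standalone Helm values file."""
    if helm_release.get("kind") != "HelmRelease":
        raise ValueError("expected a HelmRelease resource")
    values = helm_release.get("spec", {}).get("values")
    if values is None:
        raise KeyError("HelmRelease has no spec.values section")
    # FluxCD-only fields such as spec.interval and spec.install are dropped;
    # plain Helm only needs the chart values.
    Path(out_path).write_text(json.dumps(values, indent=2) + "\n")
    return values
```

The resulting file would then be passed to Helm with something like `helm upgrade --install <release> <chart> -n erpnext -f values.json`, where the release and chart names are placeholders for your own.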

What is the init container error of the conf bench pod?

Regarding "What is the init container error of the conf bench pod?": I attached screenshots below, please refer to them.

I also did what you suggested above: "take the part under values: from release and make it separate helm values file".

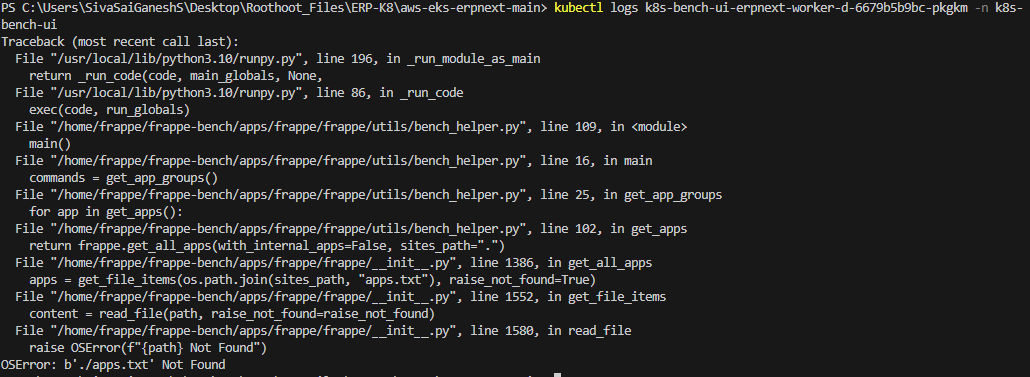

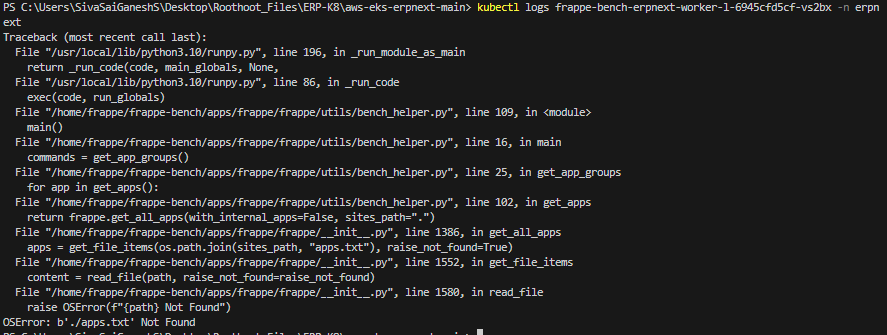

The pods came up, but the application pods are still throwing the CrashLoopBackOff error. I attached screenshots of the pod logs below; please refer to them.

The scheduler pod also shows the same error.

I'm using this image: `registry.gitlab.com/castlecraft/k8s_bench_interface/bench:14.2.1-0`

Even after changing the image tag from 14.2.1 to latest, I'm facing the same error. Pod logs with the latest image:

    PS C:\Users\SivaSaiGaneshS\Desktop\Roothoot_Files\ERP-K8\aws-eks-erpnext-main> kubectl logs frappe-bench-erpnext-worker-l-7747d7dbbf-bh8rc -n erpnext
    Traceback (most recent call last):
      File "<frozen runpy>", line 198, in _run_module_as_main
      File "<frozen runpy>", line 88, in _run_code
      File "/home/frappe/frappe-bench/apps/frappe/frappe/utils/bench_helper.py", line 109, in <module>
      File "/home/frappe/frappe-bench/apps/frappe/frappe/utils/bench_helper.py", line 16, in main
        commands = get_app_groups()
                   ^^^^^^^^^^^^^^^^
      File "/home/frappe/frappe-bench/apps/frappe/frappe/utils/bench_helper.py", line 25, in get_app_groups
        for app in get_apps():
                   ^^^^^^^^^^
      File "/home/frappe/frappe-bench/apps/frappe/frappe/utils/bench_helper.py", line 102, in get_apps
        return frappe.get_all_apps(with_internal_apps=False, sites_path=".")
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/home/frappe/frappe-bench/apps/frappe/frappe/__init__.py", line 1398, in get_all_apps
        apps = get_file_items(os.path.join(sites_path, "apps.txt"), raise_not_found=True)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/home/frappe/frappe-bench/apps/frappe/frappe/__init__.py", line 1564, in get_file_items
        content = read_file(path, raise_not_found=raise_not_found)
                  ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/home/frappe/frappe-bench/apps/frappe/frappe/__init__.py", line 1592, in read_file
        raise OSError(f"{path} Not Found")
    OSError: b'./apps.txt' Not Found
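The traceback ends in `OSError: b'./apps.txt' Not Found`: `bench_helper` asks for `apps.txt` with `sites_path="."`, i.e. relative to the working directory, which in these images is presumably the bench's sites directory. Finding nothing there is consistent with an empty or unreadable sites volume. A minimal diagnostic sketch, assuming you can run Python against the mounted sites directory (for example `/home/frappe/frappe-bench/sites`, the path in the traceback); the list of expected files is an assumption drawn from this thread, not a Frappe API.

```python
import os
from pathlib import Path

# Files the errors in this thread point at; an assumption, not an exhaustive list.
EXPECTED_FILES = ("apps.txt", "common_site_config.json")


def check_sites_dir(sites_path: str) -> dict[str, str]:
    """Return 'ok', 'missing' or 'unreadable' for each expected file in sites_path."""
    report = {}
    for name in EXPECTED_FILES:
        path = Path(sites_path) / name
        if not path.is_file():
            report[name] = "missing"  # absent, or not a regular file
        elif not os.access(path, os.R_OK):
            report[name] = "unreadable"  # present but the current user cannot read it
        else:
            report[name] = "ok"
    return report
```

Running `print(check_sites_dir("/home/frappe/frappe-bench/sites"))` as the frappe user would show whether the volume is empty or just not readable. Note that if the directory itself cannot be traversed, `Path.is_file` raises `PermissionError`, which is itself a useful answer here.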

socketio pod logs:

    kubectl logs frappe-bench-erpnext-socketio-6795f8d59c-msd7c -n erpnext
    node:events:491
          throw er; // Unhandled 'error' event
          ^

    Error: connect ECONNREFUSED 127.0.0.1:12311
        at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1278:16)
    Emitted 'error' event on RedisClient instance at:
        at RedisClient.on_error (/home/frappe/frappe-bench/apps/frappe/node_modules/redis/index.js:342:14)
        at Socket.<anonymous> (/home/frappe/frappe-bench/apps/frappe/node_modules/redis/index.js:223:14)
        at Socket.emit (node:events:513:28)
        at emitErrorNT (node:internal/streams/destroy:157:8)
        at emitErrorCloseNT (node:internal/streams/destroy:122:3)
        at processTicksAndRejections (node:internal/process/task_queues:83:21) {
      errno: -111,
      code: 'ECONNREFUSED',
      syscall: 'connect',
      address: '127.0.0.1',
      port: 12311
    }
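The socketio error is a Redis connection refused on `127.0.0.1:12311`, i.e. localhost inside the pod, where no Redis runs. One plausible reading (an assumption, not verified against the Frappe source) is that the realtime server fell back to a local default because it found no `redis_socketio` entry in `sites/common_site_config.json`, the same empty-sites-volume symptom as the `apps.txt` error. A small sketch that loads that file and reports which connection keys are absent or point at localhost; the key list mirrors the keys the args script in this thread waits for, plus `redis_socketio`.

```python
import json
from pathlib import Path
from urllib.parse import urlparse

# db_host/redis_cache/redis_queue come from the args script in this thread;
# redis_socketio is assumed to be the key the v14 socketio server reads.
CONNECTION_KEYS = ("db_host", "redis_cache", "redis_queue", "redis_socketio")
LOCAL_HOSTS = {"localhost", "127.0.0.1"}


def audit_site_config(config_path: str) -> dict[str, str]:
    """Classify each connection key as 'missing', 'localhost' or 'ok'."""
    path = Path(config_path)
    config = json.loads(path.read_text()) if path.is_file() else {}
    result = {}
    for key in CONNECTION_KEYS:
        value = config.get(key)
        if not value:
            result[key] = "missing"
            continue
        value = str(value)
        # redis_* values are URLs like redis://host:port; db_host is a bare host name.
        host = urlparse(value).hostname if "://" in value else value
        result[key] = "localhost" if host in LOCAL_HOSTS else "ok"
    return result
```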

That's not the initContainer's log. The initContainer is `frappe-bench-ownership`.
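To see the init container's own output, `kubectl logs` needs the container name passed with `-c`. A minimal Python wrapper, assuming `kubectl` is on the PATH and using the `erpnext` namespace from this thread; the pod name is a placeholder you would copy from `kubectl get pods`.

```python
import subprocess


def init_container_log_cmd(pod: str, container: str = "frappe-bench-ownership",
                           namespace: str = "erpnext") -> list[str]:
    """Build the kubectl command that prints one (init) container's logs."""
    return ["kubectl", "logs", pod, "-c", container, "-n", namespace]


def fetch_init_container_logs(pod: str, **kwargs: str) -> str:
    """Run the command and return its stdout; raises CalledProcessError if kubectl fails."""
    result = subprocess.run(init_container_log_cmd(pod, **kwargs),
                            capture_output=True, text=True, check=True)
    return result.stdout
```

`kubectl describe pod <pod> -n erpnext` additionally shows the init container's state and exit code, and for app containers stuck in CrashLoopBackOff, `kubectl logs --previous` shows the output of the last crashed run.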

OK, I tried both ways but it's still not working. The pods are throwing errors.

Something is wrong with the EFS config: the pods can't access the volumes, possibly because of a permission issue. The uid/gid for the volume needs to be 1000:1000.

You'll need to figure this out on your own.

The pods are working at my end.
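One way to check the 1000:1000 requirement from inside a container that mounts the same EFS volume is to walk the mount and list every entry not owned by that uid/gid. A minimal sketch; the mount path you pass in is an assumption, and fixing ownership itself belongs in the EFS/volume configuration, which this does not touch.

```python
import os


def find_wrong_owner(root: str, uid: int = 1000, gid: int = 1000) -> list[str]:
    """Return 'path is uid:gid' for root and every entry below it not owned by uid:gid."""
    paths = [root]
    # os.walk silently skips directories it cannot list (onerror defaults to None).
    for dirpath, dirnames, filenames in os.walk(root):
        paths.extend(os.path.join(dirpath, n) for n in dirnames + filenames)
    wrong = []
    for path in paths:
        st = os.lstat(path)  # lstat: report symlinks themselves, don't follow them
        if (st.st_uid, st.st_gid) != (uid, gid):
            wrong.append(f"{path} is {st.st_uid}:{st.st_gid}")
    return wrong
```

An empty list for the sites mount would rule ownership out; any `0:0` entries would point back at the EFS/permission explanation.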

Hi Revant,

I tried setting the permissions (uid/gid) as well, but it still throws the same error. The pods work fine at my end too if I use the image alone; it only fails when I pass args in the values.yaml file.

Domain: https://erp.roothoot.com/

The certificate is in place, but HTTP works and HTTPS does not.

The args I'm using are pasted below:

    args:
      - >
        export start=`date +%s`;
        until [[ -n `grep -hs ^ sites/common_site_config.json | jq -r ".db_host // empty"` ]] && \
        [[ -n `grep -hs ^ sites/common_site_config.json | jq -r ".redis_cache // empty"` ]] && \
        [[ -n `grep -hs ^ sites/common_site_config.json | jq -r ".redis_queue // empty"` ]];
        do
        echo "Waiting for sites/common_site_config.json to be created";
        sleep 5;
        if (( `date +%s`-start > 120 )); then
        echo "could not find sites/common_site_config.json with required keys";
        exit 1
        fi
        done;
        for i in {1..5}; do bench --site erp.roothoot.com set-config k8s_bench_url http://manage-sites-k8s-bench.bench-system.svc.cluster.local:8000 && break || sleep 15; done;
        for i in {1..5}; do bench --site erp.roothoot.com set-config k8s_bench_key admin && break || sleep 15; done;
        for i in {1..5}; do bench --site erp.roothoot.com set-config k8s_bench_secret changeit && break || sleep 15; done;
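The args script does two things: it polls `sites/common_site_config.json` every 5 seconds until `db_host`, `redis_cache` and `redis_queue` are non-empty (giving up after 120 seconds), then runs each `bench --site ... set-config` up to 5 times with 15-second pauses. The same control flow as a Python sketch, with the bench call left as a caller-supplied function so it can be exercised without a bench; the helper names are hypothetical.

```python
import json
import time
from pathlib import Path
from typing import Callable

REQUIRED_KEYS = ("db_host", "redis_cache", "redis_queue")


def config_ready(path: Path) -> bool:
    """True once the file parses as a JSON object with every required key non-empty."""
    try:
        config = json.loads(path.read_text())
    except (OSError, json.JSONDecodeError):
        return False
    return isinstance(config, dict) and all(config.get(key) for key in REQUIRED_KEYS)


def wait_for_config(path: Path, timeout: float = 120, poll: float = 5) -> None:
    """Mirror of the until-loop: sleep, then give up once timeout seconds have passed."""
    start = time.monotonic()
    while not config_ready(path):
        print(f"Waiting for {path} to be created")
        time.sleep(poll)
        if time.monotonic() - start > timeout:
            raise TimeoutError(f"could not find {path} with required keys")


def retry(action: Callable[[], bool], attempts: int = 5, delay: float = 15) -> bool:
    """Mirror of `for i in {1..5}; do cmd && break || sleep 15; done`."""
    for _ in range(attempts):
        if action():
            return True
        time.sleep(delay)
    return False
```

One difference worth noting: as far as I can tell, in the shell version, if all five `set-config` attempts fail, the loop still ends with the exit status of the last `sleep 15` (0), so the job pod can show Completed even though nothing was configured. The sketch returns `False` instead so the caller can fail explicitly.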

What fails with these args? The site must be created before executing this job. If the site doesn't exist, the job can't configure its site_config.json.
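Following that point, a minimal pre-flight sketch: a Frappe site lives in its own directory under `sites/` with a `site_config.json` inside, so the job could check for `sites/<site>/site_config.json` before calling `set-config` and fail loudly instead of retrying. The function names are hypothetical, and the file check is a simplification, not how bench itself validates a site.

```python
from pathlib import Path


def site_exists(sites_path: str, site: str) -> bool:
    """True if sites/<site>/site_config.json exists (simplified check)."""
    return (Path(sites_path) / site / "site_config.json").is_file()


def require_site(sites_path: str, site: str) -> None:
    """Exit with a clear message if the site has not been created yet."""
    if not site_exists(sites_path, site):
        raise SystemExit(
            f"site {site!r} not found under {sites_path}; "
            "create it (bench new-site) before running this job"
        )
```

For this thread that would be `require_site("/home/frappe/frappe-bench/sites", "erp.roothoot.com")`, run before the three `set-config` loops.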