ERPNext uses Redis as a cache. We run it as an LRU cache: `maxmemory` is set, and the eviction policy is `allkeys-lru`.
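For reference, here is a minimal sketch of the two settings above applied at runtime. It assumes a redis-py `Redis` client, whose `config_set(name, value)` method issues `CONFIG SET`. The `2gb` default and the function name are hypothetical. In a Kubernetes deployment, the same settings are more commonly passed as `maxmemory` and `maxmemory-policy` directives in `redis.conf` or as server arguments, because `CONFIG SET` changes are lost on restart unless persisted with `CONFIG REWRITE`.

```python
def configure_lru_cache(client, maxmemory: str = "2gb") -> None:
    """Turn a Redis instance into a bounded LRU cache (illustrative sketch).

    `client` is assumed to be a redis-py `Redis` object, or anything exposing a
    compatible `config_set(name, value)` method. The default limit is a
    hypothetical example; keep it comfortably below the pod's memory limit,
    since Redis also uses memory outside the dataset (buffers, fragmentation).
    """
    # Upper bound on memory used for data; beyond this, Redis starts evicting
    client.config_set("maxmemory", maxmemory)
    # Evict the least recently used key among *all* keys (not only keys with a TTL)
    client.config_set("maxmemory-policy", "allkeys-lru")
```

With a real server this would be called as, for example, `configure_lru_cache(redis.Redis(host="localhost", port=6379))`.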

We configured `maxmemory` to keep Redis within its k8s memory limits and to stop the server hosting it from going into a swapping frenzy.

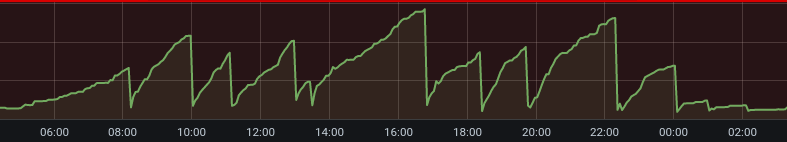

We noticed that Redis was occasionally slowing down. Further root-cause analysis (RCA) showed that the slowdowns occurred whenever Redis memory usage hit the configured `maxmemory`. We also saw a correlation between the slowdowns, `maxmemory`, and the memory usage vs. time graph above.

Each time the graph dropped sharply, we experienced a slowdown.

Under this eviction policy, once Redis reaches `maxmemory`, it evicts the least recently used keys. The graph showed memory usage suddenly falling to 20%, which meant a key with a very large value was being evicted. Drilling into the Redis events, we found that the evicted key was `bootinfo`.

Now we could establish causation. `bootinfo` is a hashmap that stores bootinfo metadata per user, with the user as the hash field. However, when Redis reaches `maxmemory` it evicts a whole key, not a single field inside a hashmap, so it was dropping the entire `bootinfo` object. As a result, the bootinfo cache was dropped and reloaded for all users every time we hit `maxmemory`. This caused a huge, sudden surge in network input to Redis, which caused the slowdown.
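The toy model below illustrates the mechanism. It is a sketch, not Redis's implementation. Like Redis, it evicts whole top-level keys. Unlike Redis, which approximates LRU by sampling a few keys (see `maxmemory-samples`), it always evicts the exact least recently used key. The class name, sizes (abstract units), and traffic pattern are all made up for illustration.

```python
from collections import OrderedDict


class ToyLRU:
    """Toy `allkeys-lru` cache: evicts whole top-level keys, least recently used first."""

    def __init__(self, maxmemory: int):
        self.maxmemory = maxmemory
        # top-level key -> {field: size}; a plain string key has a single "" field
        self.keys: "OrderedDict[str, dict[str, int]]" = OrderedDict()
        self.evicted: list[str] = []

    def used(self) -> int:
        return sum(sum(fields.values()) for fields in self.keys.values())

    def write(self, key: str, size: int, field: str = "") -> None:
        self.keys.setdefault(key, {})[field] = size
        self.keys.move_to_end(key)  # writing a key makes it most recently used
        while self.used() > self.maxmemory and self.keys:
            victim, _ = self.keys.popitem(last=False)  # least recently used key
            self.evicted.append(victim)

    def hit(self, key: str, field: str = "") -> bool:
        if field in self.keys.get(key, {}):
            self.keys.move_to_end(key)  # reads also refresh recency
            return True
        return False


cache = ToyLRU(maxmemory=100)
for user in ("user1", "user2", "user3"):
    cache.write("bootinfo", 20, field=user)  # one hash key holding 60 units
for i in range(4):
    cache.write(f"doc:{i}", 10)  # other cache traffic fills memory to 100
cache.write("doc:4", 10)  # 110 > 100: the LRU key, i.e. the whole hash, is evicted
print(cache.evicted, cache.used())  # → ['bootinfo'] 50
```

A single eviction takes usage from 110 to 50 units, and `cache.hit("bootinfo", user)` is now `False` for every user, so every user's bootinfo has to be rebuilt and written back.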

To fix this, we decided to convert hashmaps to keys. The `bootinfo` hashmap looked like this:

`bootinfo: { user1: meta1, user2: meta2 }`

We converted this to:

`bootinfo|user1: meta1`, `bootinfo|user2: meta2`
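Here is a minimal sketch of the conversion. It assumes the `bootinfo|<user>` naming shown above, and the function names are hypothetical (not ERPNext/Frappe APIs). With redis-py, the resulting mapping could be written in one round trip with `mset()`, and each user's entry would then be read with `get()` instead of `hget()`.

```python
SEPARATOR = "|"  # separator used in the key names above


def user_key(name: str, user: str) -> str:
    """Top-level key for one user's entry, e.g. `bootinfo|user1`."""
    return f"{name}{SEPARATOR}{user}"


def flatten_hash(name: str, fields: dict[str, str]) -> dict[str, str]:
    """Turn one hashmap (`name` -> {field: value}) into one plain key per field.

    Each resulting key can then be evicted independently under allkeys-lru.
    """
    return {user_key(name, field): value for field, value in fields.items()}


print(flatten_hash("bootinfo", {"user1": "meta1", "user2": "meta2"}))
# → {'bootinfo|user1': 'meta1', 'bootinfo|user2': 'meta2'}
```

One trade-off to keep in mind is that flat keys make bulk operations less convenient. Clearing every user's bootinfo is no longer a single `DEL bootinfo`; it needs something like `SCAN` with `MATCH bootinfo|*` followed by deleting the matches.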

This ensures that only a very small chunk of bootinfo (a single user's entry) is evicted at a time, not the entire object.
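The hypothetical helper below makes "small chunk" concrete. It computes which users' bootinfo must be rebuilt (and sent back to Redis) after a single eviction under each layout. The 1,000 users and 50 KB per entry are illustrative numbers, not measurements from our setup.

```python
def users_to_reload(evicted_key: str, users: list[str]) -> list[str]:
    """Users whose cached bootinfo is lost when `evicted_key` is evicted."""
    if evicted_key == "bootinfo":
        # Hashmap layout: the whole hash is a single key, so every entry goes at once
        return list(users)
    prefix = "bootinfo|"
    if evicted_key.startswith(prefix):
        # Flat layout: the key names exactly one user
        user = evicted_key[len(prefix):]
        return [user] if user in users else []
    return []  # eviction of an unrelated key


users = [f"user{i}" for i in range(1000)]
entry_kb = 50  # hypothetical size of one user's bootinfo
print(len(users_to_reload("bootinfo", users)) * entry_kb)  # hashmap layout → 50000
print(len(users_to_reload("bootinfo|user7", users)) * entry_kb)  # flat layout → 50
```

In this example, one eviction under the hashmap layout means rebuilding and re-sending every user's entry (50,000 KB). Under the flat layout it means a single entry (50 KB).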

After this change, the memory usage vs. time graph looked like this:

As visible, the cache memory is now fully utilised, instead of being freed by sporadic large evictions.

Another improvement was reduced inbound network traffic (network in) to the Redis cache. As the following graph shows, network in dropped to less than half. (The upper line is network out; the lower line is network in.)

(Left: with hashmap; right: with concatenated keys.)

Hence, our quick takeaway: if Redis is used as an LRU cache, simple concatenated keys perform better than hashmaps, because Redis evicts whole keys rather than individual hash fields.