AI Agents are currently implemented using OpenAI’s Assistants API.

So to answer your questions:

Does it need specific hardware? Specifications?

No. The models run on OpenAI’s servers, so no local hardware is required.

Will it scan all the data available in the onsite data silo (aka “database”, files) to accomplish its functions? If so, can such a scan be restricted? Disabled? Will the AI functions then stop working or will it still be trained with the use of ERPNext (or the Framework)?

The AI can only access data through the “functions” you create for it; it cannot call any function other than the ones you specify. On top of that, when it makes a call, all user and role permissions are enforced, so the Agent can only access what the user can access. The Agent is not “trained” on any of your data; it only requests data in order to produce results.
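
To make the allow-list idea concrete, here is a minimal sketch of how a tool call requested by the model could be dispatched. It is not Raven’s code: `Agent`, `dispatch_tool_call`, `FunctionNotAllowed`, and `get_open_tasks` are hypothetical names chosen for illustration. The point is that a function the agent was never configured with is refused, and configured functions run on behalf of the requesting user.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict


@dataclass
class Agent:
    """Hypothetical agent config: only the functions listed here may be called."""
    name: str
    allowed_functions: Dict[str, Callable[..., Any]] = field(default_factory=dict)


class FunctionNotAllowed(Exception):
    """Raised when the model asks for a function the agent was not given."""


def dispatch_tool_call(agent: Agent, function_name: str, user: str, **kwargs: Any) -> Any:
    """Run a model-requested function only if it is in the agent's allow-list."""
    func = agent.allowed_functions.get(function_name)
    if func is None:
        # The model requested something outside its configuration: refuse it.
        raise FunctionNotAllowed(f"{function_name!r} is not configured for agent {agent.name!r}")
    # Pass the requesting user along so downstream permission checks apply to them.
    return func(user=user, **kwargs)


# Hypothetical example function exposed to the agent.
def get_open_tasks(user: str) -> list:
    return [f"task assigned to {user}"]


agent = Agent("Support Bot", {"get_open_tasks": get_open_tasks})
result = dispatch_tool_call(agent, "get_open_tasks", user="jane@example.com")
# result == ["task assigned to jane@example.com"]
```

Any other function name, such as a made-up `delete_all`, raises `FunctionNotAllowed` instead of being executed.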

Does the AI feature need a network connection to an external entity (organization)? If so, does Raven now need that network connection to function? If so, will it transmit onsite data to that entity?

Yes, because the Agent makes calls to OpenAI’s API.

How can this data leakage be prohibited and verified? (Is there a point where such perimeter checks on the data can be accomplished reliably and securely, and how? Is this duly documented?)

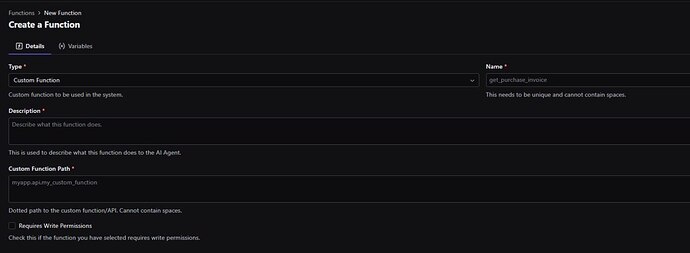

The AI Agent can use standard functions, such as getting a document or creating a document, depending on what you specify when configuring it. All of these call standard Frappe functions that perform user permission checks; see raven/raven/ai/functions.py on the develop branch of The-Commit-Company/raven on GitHub.
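
As a rough illustration of what “standard functions with permission checks” means, the toy model below has `get_document` and `create_document` check the requesting user’s permission before touching any data. The permission table, the in-memory document store, and all function names here are hypothetical stand-ins; in Raven the actual checks come from Frappe’s own permission system, not from code like this.

```python
from typing import Dict, Set, Tuple

# Hypothetical permission table: (user, doctype) -> allowed permission types.
PERMISSIONS: Dict[Tuple[str, str], Set[str]] = {
    ("jane@example.com", "ToDo"): {"read", "create"},
    ("bob@example.com", "ToDo"): {"read"},
}

# Hypothetical in-memory store standing in for the database.
DOCUMENTS: Dict[Tuple[str, str], dict] = {
    ("ToDo", "TODO-0001"): {"description": "Follow up with supplier"},
}


def has_permission(user: str, doctype: str, ptype: str) -> bool:
    """Return True if `user` holds permission type `ptype` on `doctype`."""
    return ptype in PERMISSIONS.get((user, doctype), set())


def get_document(user: str, doctype: str, name: str) -> dict:
    """Read a document on behalf of `user`; refuse without read access."""
    if not has_permission(user, doctype, "read"):
        raise PermissionError(f"{user} cannot read {doctype}")
    return DOCUMENTS[(doctype, name)]


def create_document(user: str, doctype: str, name: str, data: dict) -> dict:
    """Create a document on behalf of `user`; refuse without create access."""
    if not has_permission(user, doctype, "create"):
        raise PermissionError(f"{user} cannot create {doctype}")
    DOCUMENTS[(doctype, name)] = dict(data)
    return DOCUMENTS[(doctype, name)]
```

In this model, an agent acting for `bob@example.com` can read `TODO-0001` but gets a `PermissionError` if it tries to create a ToDo, exactly as Bob would when using the application directly.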

For custom function calls (APIs), Raven does not allow you to use arbitrary internal functions. You can only create functions that point to whitelisted APIs in apps, so user permissions should be handled by those APIs.

Can any AI feature be disabled if any doubts about the integrity of the onsite data due to its functioning or lack of information about its functioning persist? Can this be done easily (and, if needed, urgently) if new information about it compromising the site’s data appear?

Yes. The integration can be disabled with a single checkbox in the settings. Moreover, OpenAI is only called when a user explicitly sends a direct message to an agent on Raven; nothing runs automatically in the background.
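
Put as code, the two conditions above amount to a simple gate checked before any request leaves the server. This is a hypothetical sketch, not Raven’s implementation: `Settings`, `Message`, `enable_ai_integration`, and `should_call_openai` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass
class Settings:
    enable_ai_integration: bool  # hypothetical name for the settings checkbox


@dataclass
class Message:
    is_direct_message: bool
    recipient_is_agent: bool
    text: str


def should_call_openai(settings: Settings, message: Message) -> bool:
    """Allow an outbound call only if AI is enabled and a user messaged an agent directly."""
    if not settings.enable_ai_integration:
        # Unticking the checkbox stops every call, regardless of the message.
        return False
    return message.is_direct_message and message.recipient_is_agent
```

With the checkbox off, or for an ordinary channel message, the function returns `False` and nothing is sent.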

Hope that clears things up.