Hello everyone,

I want to express my gratitude for all the support I’ve received. I’m thrilled to share that I successfully set up my ERPNext application using MicroK8s. Special thanks to @Peer and @revant_one for their invaluable assistance. Through this process, I’ve gained a lot of knowledge about Kubernetes resources. I’ve documented the steps I followed to deploy my ERPNext application on a local Ubuntu server using MicroK8s, and I hope this can be helpful to anyone who might need it.

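Steps:

Start MicroK8s, check its status, and enable the DNS, hostpath storage, and ingress addons:

```
microk8s start
microk8s status
microk8s enable dns hostpath-storage ingress
```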
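Addon pods can take a little while to come up after `microk8s enable`. A minimal sketch, assuming you want a script to block until MicroK8s and the addon pods are Ready before continuing. `microk8s status --wait-ready` and `kubectl wait` are standard commands; the function name `wait_for_addons`, the `MICROK8S` override variable (there only so the function can be exercised without a cluster) and the default 300-second timeout are illustrative choices, not part of the original setup.

```shell
# Hypothetical helper: block until MicroK8s and the addon pods are ready.
# MICROK8S may be overridden (e.g. for testing); it defaults to the real CLI.
wait_for_addons() {
  mk=${MICROK8S:-microk8s}
  timeout=${1:-300}
  # Wait for the MicroK8s services themselves.
  $mk status --wait-ready --timeout "$timeout" || return 1
  # Then wait for the pods created by the dns, hostpath-storage and ingress addons.
  for ns in kube-system ingress; do
    $mk kubectl wait --for=condition=Ready pod --all \
      -n "$ns" --timeout="${timeout}s" || return 1
  done
}
```

Run it as `wait_for_addons 300` right after enabling the addons; it stops at the first command that fails or times out.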

```
~/kube-poc$ microk8s kubectl get pods -A
NAMESPACE     NAME                                         READY   STATUS    RESTARTS      AGE
ingress       nginx-ingress-microk8s-controller-j72zk      1/1     Running   1 (99s ago)   3h55m
kube-system   calico-kube-controllers-796fb75cc-b7s6k      1/1     Running   9 (99s ago)   3d1h
kube-system   calico-node-wr7tq                            1/1     Running   9 (99s ago)   3d1h
kube-system   coredns-5986966c54-z2chp                     1/1     Running   9 (99s ago)   3d1h
kube-system   dashboard-metrics-scraper-795895d745-n64mz   1/1     Running   9 (99s ago)   3d1h
kube-system   hostpath-provisioner-7c8bdf94b8-5zcbt        1/1     Running   1 (99s ago)   4h1m
kube-system   kubernetes-dashboard-6796797fb5-7ml5q        1/1     Running   9 (99s ago)   3d1h
kube-system   metrics-server-7cff7889bd-4f5bh              1/1     Running   9 (99s ago)   3d1h
```

```
~/kube-poc$ microk8s kubectl get sc
NAME                          PROVISIONER            RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
microk8s-hostpath (default)   microk8s.io/hostpath   Delete          WaitForFirstConsumer   false                  4h2m
```
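The MariaDB volume created by the chart does not set a storage class in custom-values.yaml, so it falls back to the cluster default; that is why `microk8s-hostpath` being marked `(default)` matters here. A small sketch for checking this from a script; `default_storage_class` is a hypothetical helper that only parses the `kubectl get sc` table format shown above.

```shell
# Hypothetical helper: print the name of the default StorageClass from
# `kubectl get sc` output read on stdin.
default_storage_class() {
  # Skip the header row; kubectl marks the default class with "(default)"
  # right after its name, so it appears as the second whitespace-separated field.
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}
```

On this setup, `microk8s kubectl get sc | default_storage_class` should print `microk8s-hostpath`.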

Create the directory /mnt/data/hostpath on the host's local filesystem, for example with `sudo mkdir -p /mnt/data/hostpath`.

kube-pvc.yaml:

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: hostpath-pv
spec:
  storageClassName: microk8s-hostpath
  capacity:
    storage: 8Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: /mnt/data/hostpath
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: hostpath-pvc
spec:
  storageClassName: microk8s-hostpath
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 8Gi
  volumeName: hostpath-pv
```
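If you want the same PersistentVolume/PersistentVolumeClaim pair for a different directory or size, a heredoc keeps the manifest in one place. A sketch, assuming the object names `hostpath-pv`/`hostpath-pvc` from kube-pvc.yaml; the function `hostpath_pv_manifest` is hypothetical, and its output can be piped straight into `kubectl apply -f -`.

```shell
# Hypothetical helper: emit the PV/PVC pair from kube-pvc.yaml with the host
# directory and size as parameters.
# Usage: hostpath_pv_manifest /mnt/data/hostpath 8Gi | microk8s kubectl apply -f -
hostpath_pv_manifest() {
  path=${1:?host path required}
  size=${2:-8Gi}
  cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: hostpath-pv
spec:
  storageClassName: microk8s-hostpath
  capacity:
    storage: ${size}
  accessModes:
    - ReadWriteMany
  hostPath:
    path: ${path}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: hostpath-pvc
spec:
  storageClassName: microk8s-hostpath
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: ${size}
  volumeName: hostpath-pv
EOF
}
```

With `/mnt/data/hostpath` and `8Gi` as arguments it produces the same objects as kube-pvc.yaml.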

```
~/kube-poc$ microk8s kubectl apply -f kube-pvc.yaml

~/kube-poc$ microk8s kubectl get pvc -A
NAMESPACE   NAME           STATUS   VOLUME        CAPACITY   ACCESS MODES   STORAGECLASS        VOLUMEATTRIBUTESCLASS   AGE
default     hostpath-pvc   Bound    hostpath-pv   8Gi        RWX            microk8s-hostpath   <unset>                 12h

~/kube-poc$ microk8s kubectl get pv -A
NAME          CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                  STORAGECLASS        VOLUMEATTRIBUTESCLASS   REASON   AGE
hostpath-pv   8Gi        RWX            Retain           Bound    default/hostpath-pvc   microk8s-hostpath   <unset>                          12h
```
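With `WaitForFirstConsumer`, a dynamically provisioned claim normally stays `Pending` until a pod mounts it, so a quick check for unbound claims helps when something looks stuck. A sketch that parses the `kubectl get pvc -A` table above; `unbound_pvcs` is a hypothetical helper that prints `namespace/name` for each claim whose STATUS is not `Bound` and exits non-zero if it finds any.

```shell
# Hypothetical helper: read `kubectl get pvc -A` output on stdin, print
# namespace/name of every claim that is not Bound, exit 1 if there are any.
unbound_pvcs() {
  awk '
    NR == 1 { next }                        # skip the header row
    $3 != "Bound" { print $1 "/" $2; bad = 1 }
    END { exit bad }                        # uninitialized bad means 0
  '
}
```

For example: `microk8s kubectl get pvc -A | unbound_pvcs || echo "some claims are not bound yet"`.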

```
~/kube-poc$ microk8s kubectl create namespace erpnext
namespace/erpnext created

microk8s helm repo add frappe https://helm.erpnext.com
```
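Before installing, it can help to refresh the repository index and see which chart versions are available (the Job manifest later in this post carries the label `helm.sh/chart: erpnext-7.0.122`). A sketch; `setup_frappe_repo` and the `HELM` override variable are hypothetical, while `repo add --force-update`, `repo update` and `search repo --versions` are standard Helm 3 subcommands and flags.

```shell
# Hypothetical helper: add (or refresh) the frappe Helm repository and list
# the erpnext chart versions it offers. HELM can be overridden for testing.
setup_frappe_repo() {
  helm_cmd=${HELM:-microk8s helm}
  # --force-update replaces the repo entry if it already exists.
  $helm_cmd repo add frappe https://helm.erpnext.com --force-update || return 1
  $helm_cmd repo update frappe || return 1
  $helm_cmd search repo frappe/erpnext --versions
}
```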

custom-values.yaml (copied from https://github.com/frappe/helm/blob/main/erpnext/values.yaml):

```yaml
# Default values for erpnext.
# This is a YAML-formatted file.
# Declare variables to be passed into your templates.

# Configure external database host
# dbHost: ""
# dbPort: 3306
# dbRootUser: ""
# dbRootPassword: ""
# dbRds: false

image:
  repository: frappe/erpnext
  tag: v15.38.4
  pullPolicy: IfNotPresent

nginx:
  replicaCount: 1
  autoscaling:
    enabled: false
    minReplicas: 1
    maxReplicas: 3
    targetCPU: 75
    targetMemory: 75
  # config: |
  #   # custom conf /etc/nginx/conf.d/default.conf
  environment:
    upstreamRealIPAddress: "127.0.0.1"
    upstreamRealIPRecursive: "off"
    upstreamRealIPHeader: "X-Forwarded-For"
    frappeSiteNameHeader: "$host"
  livenessProbe:
    tcpSocket:
      port: 8080
    initialDelaySeconds: 5
    periodSeconds: 10
  readinessProbe:
    tcpSocket:
      port: 8080
    initialDelaySeconds: 5
    periodSeconds: 10
  service:
    type: ClusterIP
    port: 8080
  resources: {}
  nodeSelector: {}
  tolerations: []
  affinity: {}
  # Custom topologySpreadConstraints (uncomment and modify to override defaults)
  # topologySpreadConstraints:
  #   - maxSkew: 2
  #     topologyKey: failure-domain.beta.kubernetes.io/zone
  #     whenUnsatisfiable: ScheduleAnyway

  # Default topologySpreadConstraints (used if topologySpreadConstraints is not set)
  defaultTopologySpread:
    maxSkew: 1
    topologyKey: kubernetes.io/hostname
    whenUnsatisfiable: DoNotSchedule
  envVars: []
  initContainers: []
  sidecars: []

worker:
  gunicorn:
    replicaCount: 1
    autoscaling:
      enabled: false
      minReplicas: 1
      maxReplicas: 3
      targetCPU: 75
      targetMemory: 75
    livenessProbe:
      tcpSocket:
        port: 8000
      initialDelaySeconds: 5
      periodSeconds: 10
    readinessProbe:
      tcpSocket:
        port: 8000
      initialDelaySeconds: 5
      periodSeconds: 10
    service:
      type: ClusterIP
      port: 8000
    resources: {}
    nodeSelector: {}
    tolerations: []
    affinity: {}
    args: []
    envVars: []
    initContainers: []
    sidecars: []

  default:
    replicaCount: 1
    autoscaling:
      enabled: false
      minReplicas: 1
      maxReplicas: 3
      targetCPU: 75
      targetMemory: 75
    resources: {}
    nodeSelector: {}
    tolerations: []
    affinity: {}
    livenessProbe:
      override: false
      probe: {}
    readinessProbe:
      override: false
      probe: {}
    envVars: []
    initContainers: []
    sidecars: []

  short:
    replicaCount: 1
    autoscaling:
      enabled: false
      minReplicas: 1
      maxReplicas: 3
      targetCPU: 75
      targetMemory: 75
    resources: {}
    nodeSelector: {}
    tolerations: []
    affinity: {}
    livenessProbe:
      override: false
      probe: {}
    readinessProbe:
      override: false
      probe: {}
    envVars: []
    initContainers: []
    sidecars: []

  long:
    replicaCount: 1
    autoscaling:
      enabled: false
      minReplicas: 1
      maxReplicas: 3
      targetCPU: 75
      targetMemory: 75
    resources: {}
    nodeSelector: {}
    tolerations: []
    affinity: {}
    livenessProbe:
      override: false
      probe: {}
    readinessProbe:
      override: false
      probe: {}
    envVars: []
    initContainers: []
    sidecars: []

  scheduler:
    replicaCount: 1
    resources: {}
    nodeSelector: {}
    tolerations: []
    affinity: {}
    livenessProbe:
      override: false
      probe: {}
    readinessProbe:
      override: false
      probe: {}
    envVars: []
    initContainers: []
    sidecars: []

  # Custom topologySpreadConstraints (uncomment and modify to override defaults)
  # topologySpreadConstraints:
  #   - maxSkew: 2
  #     topologyKey: failure-domain.beta.kubernetes.io/zone
  #     whenUnsatisfiable: ScheduleAnyway

  # Default topologySpreadConstraints (used if topologySpreadConstraints is not set)
  defaultTopologySpread:
    maxSkew: 1
    topologyKey: kubernetes.io/hostname
    whenUnsatisfiable: DoNotSchedule

  healthProbe: |
    exec:
      command:
        - bash
        - -c
        - echo "Ping backing services";
        {{- if .Values.mariadb.enabled }}
        {{- if eq .Values.mariadb.architecture "replication" }}
        - wait-for-it {{ .Release.Name }}-mariadb-primary:{{ .Values.mariadb.primary.service.ports.mysql }} -t 1;
        {{- else }}
        - wait-for-it {{ .Release.Name }}-mariadb:{{ .Values.mariadb.primary.service.ports.mysql }} -t 1;
        {{- end }}
        {{- else if .Values.dbHost }}
        - wait-for-it {{ .Values.dbHost }}:{{ .Values.mariadb.primary.service.ports.mysql }} -t 1;
        {{- end }}
        {{- if index .Values "redis-cache" "host" }}
        - wait-for-it {{ .Release.Name }}-redis-cache-master:{{ index .Values "redis-cache" "master" "containerPorts" "redis" }} -t 1;
        {{- else if index .Values "redis-cache" "host" }}
        - wait-for-it {{ index .Values "redis-cache" "host" }} -t 1;
        {{- end }}
        {{- if index .Values "redis-queue" "host" }}
        - wait-for-it {{ .Release.Name }}-redis-queue-master:{{ index .Values "redis-queue" "master" "containerPorts" "redis" }} -t 1;
        {{- else if index .Values "redis-queue" "host" }}
        - wait-for-it {{ index .Values "redis-queue" "host" }} -t 1;
        {{- end }}
        {{- if .Values.postgresql.host }}
        - wait-for-it {{ .Values.postgresql.host }}:{{ .Values.postgresql.primary.service.ports.postgresql }} -t 1;
        {{- else if .Values.postgresql.enabled }}
        - wait-for-it {{ .Release.Name }}-postgresql:{{ .Values.postgresql.primary.service.ports.postgresql }} -t 1;
        {{- end }}
    initialDelaySeconds: 15
    periodSeconds: 5

socketio:
  replicaCount: 1
  autoscaling:
    enabled: false
    minReplicas: 1
    maxReplicas: 3
    targetCPU: 75
    targetMemory: 75
  livenessProbe:
    tcpSocket:
      port: 9000
    initialDelaySeconds: 5
    periodSeconds: 10
  readinessProbe:
    tcpSocket:
      port: 9000
    initialDelaySeconds: 5
    periodSeconds: 10
  resources: {}
  nodeSelector: {}
  tolerations: []
  affinity: {}
  service:
    type: ClusterIP
    port: 9000
  envVars: []
  initContainers: []
  sidecars: []

persistence:
  worker:
    enabled: true
    # existingClaim: ""
    size: 6Gi
    storageClass: "microk8s-hostpath"
  logs:
    # Container based log search and analytics stack recommended
    enabled: false
    # existingClaim: ""
    size: 8Gi
    # storageClass: "nfs"

# Ingress
ingress:
  # ingressName: ""
  # className: ""
  enabled: false
  annotations: {}
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
    # cert-manager.io/cluster-issuer: letsencrypt-prod
  hosts:
  - host: erp.cluster.local
    paths:
    - path: /
      pathType: ImplementationSpecific
  tls: []
  #  - secretName: auth-server-tls
  #    hosts:
  #      - auth-server.local

jobs:
  volumePermissions:
    enabled: false
    backoffLimit: 0
    resources: {}
    nodeSelector: {}
    tolerations: []
    affinity: {}

  configure:
    enabled: true
    fixVolume: true
    backoffLimit: 0
    resources: {}
    nodeSelector: {}
    tolerations: []
    affinity: {}
    envVars: []
    command: []
    args: []

  createSite:
    enabled: false
    forceCreate: false
    siteName: "erp.cluster.local"
    adminPassword: "changeit"
    installApps:
    - "erpnext"
    dbType: "mariadb"
    backoffLimit: 0
    resources: {}
    nodeSelector: {}
    tolerations: []
    affinity: {}

  dropSite:
    enabled: false
    forced: false
    siteName: "erp.cluster.local"
    backoffLimit: 0
    resources: {}
    nodeSelector: {}
    tolerations: []
    affinity: {}

  backup:
    enabled: false
    siteName: "erp.cluster.local"
    withFiles: true
    backoffLimit: 0
    resources: {}
    nodeSelector: {}
    tolerations: []
    affinity: {}

  migrate:
    enabled: false
    siteName: "erp.cluster.local"
    skipFailing: false
    backoffLimit: 0
    resources: {}
    nodeSelector: {}
    tolerations: []
    affinity: {}

  custom:
    enabled: false
    jobName: ""
    labels: {}
    backoffLimit: 0
    initContainers: []
    containers: []
    restartPolicy: Never
    volumes: []
    nodeSelector: {}
    affinity: {}
    tolerations: []

imagePullSecrets: []
nameOverride: ""
fullnameOverride: ""

serviceAccount:
  # Specifies whether a service account should be created
  create: true

podSecurityContext:
  supplementalGroups: [1000]

securityContext:
  capabilities:
    add:
    - CAP_CHOWN
  # readOnlyRootFilesystem: true
  # runAsNonRoot: true
  # runAsUser: 1000

redis-cache:
  # https://github.com/bitnami/charts/tree/master/bitnami/redis
  enabled: true
  # host: ""
  architecture: standalone
  auth:
    enabled: false
    sentinal: false
  master:
    containerPorts:
      redis: 6379
    persistence:
      enabled: false

redis-queue:
  # https://github.com/bitnami/charts/tree/master/bitnami/redis
  enabled: true
  # host: ""
  architecture: standalone
  auth:
    enabled: false
    sentinal: false
  master:
    containerPorts:
      redis: 6379
    persistence:
      enabled: false

mariadb:
  # https://github.com/bitnami/charts/tree/master/bitnami/mariadb
  enabled: true
  auth:
    rootPassword: "changeit"
    username: "erpnext"
    password: "changeit"
    replicationPassword: "changeit"
  primary:
    service:
      ports:
        mysql: 3306
    extraFlags: >-
      --skip-character-set-client-handshake
      --skip-innodb-read-only-compressed
      --character-set-server=utf8mb4
      --collation-server=utf8mb4_unicode_ci

postgresql:
  # https://github.com/bitnami/charts/tree/master/bitnami/postgresql
  enabled: false
  # host: ""
  auth:
    username: "postgres"
    postgresPassword: "changeit"
  primary:
    service:
      ports:
        postgresql: 5432
```

Note that `persistence.worker.storageClass` is set to `microk8s-hostpath`: the chart's shared, ReadWriteMany sites volume (`frappe-bench-erpnext`) is provisioned from that StorageClass.
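Because YAML indentation is significant, a values file that lost its indentation (for example when pasted into a post) will not parse. Rendering the chart locally with `helm template` catches that before anything is sent to the cluster. A sketch; `render_check` and the `HELM` override variable are hypothetical names.

```shell
# Hypothetical helper: render the chart locally with a values file and report
# whether it renders, without touching the cluster.
render_check() {
  values=${1:-custom-values.yaml}
  helm_cmd=${HELM:-microk8s helm}
  if $helm_cmd template frappe-bench frappe/erpnext -n erpnext -f "$values" >/dev/null; then
    echo "ok: $values renders"
  else
    echo "error: $values does not render" >&2
    return 1
  fi
}
```

Run `render_check custom-values.yaml` before `helm install`; Helm's own error message (printed on stderr) points at the offending line.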

```
~/kube-poc$ microk8s helm install frappe-bench -n erpnext -f custom-values.yaml frappe/erpnext
NAME: frappe-bench
LAST DEPLOYED: Tue Oct 22 01:45:46 2024
NAMESPACE: erpnext
STATUS: deployed
REVISION: 1
NOTES:
Frappe/ERPNext Release deployed

Release Name: frappe-bench-erpnext

Wait for the pods to start.
To create sites and other resources, refer:

https://github.com/frappe/helm/blob/main/erpnext/README.md

Frequently Asked Questions:

https://helm.erpnext.com/faq
```
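`helm install` fails if a release named `frappe-bench` already exists. A sketch of an idempotent alternative using `helm upgrade --install`, which installs the release when it is missing and upgrades it otherwise; `deploy_erpnext` and the `HELM`, `VALUES` and `CHART_VERSION` variables are hypothetical.

```shell
# Hypothetical helper: install or upgrade the frappe-bench release.
# CHART_VERSION is optional; set it (e.g. 7.0.122) to pin the chart version.
deploy_erpnext() {
  helm_cmd=${HELM:-microk8s helm}
  set -- upgrade --install frappe-bench frappe/erpnext \
    -n erpnext -f "${VALUES:-custom-values.yaml}"
  if [ -n "${CHART_VERSION:-}" ]; then
    set -- "$@" --version "$CHART_VERSION"
  fi
  $helm_cmd "$@"
}
```

For example, `CHART_VERSION=7.0.122 deploy_erpnext` re-applies custom-values.yaml with the chart version pinned.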

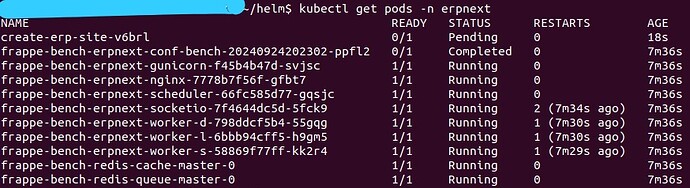

```
~/kube-poc$ microk8s kubectl get pods -A
NAMESPACE     NAME                                                   READY   STATUS      RESTARTS      AGE
erpnext       frappe-bench-erpnext-conf-bench-20241022014932-zsb2b   0/1     Completed   0             40s
erpnext       frappe-bench-erpnext-gunicorn-7fdcf996ff-9mtnh         1/1     Running     0             40s
erpnext       frappe-bench-erpnext-nginx-5d6ff6bfd-m8h4k             1/1     Running     0             40s
erpnext       frappe-bench-erpnext-scheduler-7f5b6f7954-5qmp5        1/1     Running     1 (32s ago)   40s
erpnext       frappe-bench-erpnext-socketio-579b548d7c-252w9         1/1     Running     2 (31s ago)   40s
erpnext       frappe-bench-erpnext-worker-d-5fb4d5b4b7-h9l6d         0/1     Running     2 (27s ago)   40s
erpnext       frappe-bench-erpnext-worker-l-5754c45c46-xs6k9         0/1     Running     2 (27s ago)   40s
erpnext       frappe-bench-erpnext-worker-s-8d9687848-8qmdq          0/1     Running     2 (27s ago)   40s
erpnext       frappe-bench-mariadb-0                                 1/1     Running     0             40s
erpnext       frappe-bench-redis-cache-master-0                      1/1     Running     0             40s
erpnext       frappe-bench-redis-queue-master-0                      1/1     Running     0             40s
ingress       nginx-ingress-microk8s-controller-j72zk                1/1     Running     1 (12m ago)   4h5m
kube-system   calico-kube-controllers-796fb75cc-b7s6k                1/1     Running     9 (12m ago)   3d2h
kube-system   calico-node-wr7tq                                      1/1     Running     9 (12m ago)   3d2h
kube-system   coredns-5986966c54-z2chp                               1/1     Running     9 (12m ago)   3d2h
kube-system   dashboard-metrics-scraper-795895d745-n64mz             1/1     Running     9 (12m ago)   3d1h
kube-system   hostpath-provisioner-7c8bdf94b8-5zcbt                  1/1     Running     1 (12m ago)   4h12m
kube-system   kubernetes-dashboard-6796797fb5-7ml5q                  1/1     Running     9 (12m ago)   3d1h
kube-system   metrics-server-7cff7889bd-4f5bh                        1/1     Running     9 (12m ago)   3d1h
```
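Right after the install, the three worker pods above still report `0/1`; in the later listing they are `1/1`. A sketch for listing only the pods that are not ready yet, ignoring finished Job pods such as `conf-bench`; `not_ready_pods` is a hypothetical helper that reads the `kubectl get pods -A` table from stdin.

```shell
# Hypothetical helper: read `kubectl get pods -A` output on stdin and list pods
# that are neither fully ready nor finished (STATUS "Completed").
not_ready_pods() {
  awk 'NR > 1 {
    split($3, r, "/")                 # READY column, e.g. "0/1"
    if (r[1] + 0 < r[2] + 0 && $4 != "Completed") print $1 "/" $2
  }'
}
```

`microk8s kubectl get pods -A | not_ready_pods` prints nothing once every long-running pod is ready.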

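```
~/kube-poc$ microk8s kubectl get pvc -A
NAMESPACE   NAME                          STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS        VOLUMEATTRIBUTESCLASS   AGE
default     hostpath-pvc                  Bound    hostpath-pv                                8Gi        RWX            microk8s-hostpath   <unset>                 3h55m
erpnext     data-frappe-bench-mariadb-0   Bound    pvc-f5d3d2df-1f30-4108-a2fb-a3d480c317f4   8Gi        RWO            microk8s-hostpath   <unset>                 7m50s
erpnext     frappe-bench-erpnext          Bound    pvc-5437ff12-1d6d-4db7-9b37-65162f1652c1   6Gi        RWX            microk8s-hostpath   <unset>                 4m4s
```

As this output shows, the release's own claims (`data-frappe-bench-mariadb-0` and `frappe-bench-erpnext`) were provisioned dynamically by the `microk8s-hostpath` StorageClass; the manually created `hostpath-pvc` in the `default` namespace is not used by the release.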
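To see where the ERPNext `sites` data actually lives on the host, follow the claim to its PersistentVolume. A sketch, assuming the `microk8s-hostpath` provisioner creates `hostPath` volumes (check with `microk8s kubectl get pv <name> -o yaml` if unsure); `pvc_host_path` and the `KUBECTL` override variable are hypothetical.

```shell
# Hypothetical helper: print the host directory behind a PVC provisioned by the
# microk8s-hostpath StorageClass.
# Usage: pvc_host_path erpnext frappe-bench-erpnext
pvc_host_path() {
  ns=$1
  claim=$2
  kc=${KUBECTL:-microk8s kubectl}
  # The claim records which PersistentVolume it is bound to ...
  pv=$($kc get pvc "$claim" -n "$ns" -o jsonpath='{.spec.volumeName}') || return 1
  [ -n "$pv" ] || { echo "claim $ns/$claim is not bound" >&2; return 1; }
  # ... and a hostPath-backed PV stores the directory in .spec.hostPath.path.
  $kc get pv "$pv" -o jsonpath='{.spec.hostPath.path}'
}
```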

create-new-site-job.yaml (the chart's `job-create-site.yaml` template, rendered with `SITE_NAME` set to `localhost`):

```yaml
---
# Source: erpnext/templates/job-create-site.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: frappe-bench-erpnext-new-site-20241022020025
  labels:
    helm.sh/chart: erpnext-7.0.122
    app.kubernetes.io/name: erpnext
    app.kubernetes.io/instance: frappe-bench
    app.kubernetes.io/version: "v15.38.2"
    app.kubernetes.io/managed-by: Helm
  annotations:
spec:
  backoffLimit: 0
  template:
    spec:
      serviceAccountName: frappe-bench-erpnext
      securityContext:
        supplementalGroups:
          - 1000
      initContainers:
        - name: validate-config
          image: "frappe/erpnext:v15.38.4"
          imagePullPolicy: IfNotPresent
          command: ["bash", "-c"]
          args:
            - >
              export start=`date +%s`;
              until [[ -n `grep -hs ^ sites/common_site_config.json | jq -r ".db_host // empty"` ]] && \
                [[ -n `grep -hs ^ sites/common_site_config.json | jq -r ".redis_cache // empty"` ]] && \
                [[ -n `grep -hs ^ sites/common_site_config.json | jq -r ".redis_queue // empty"` ]];
              do
                echo "Waiting for sites/common_site_config.json to be created";
                sleep 5;
                if (( `date +%s`-start > 600 )); then
                  echo "could not find sites/common_site_config.json with required keys";
                  exit 1
                fi
              done;
              echo "sites/common_site_config.json found";
              echo "Waiting for database to be reachable...";
              wait-for-it -t 180 $(DB_HOST):$(DB_PORT);
              echo "Database is reachable.";
          env:
            - name: "DB_HOST"
              value: frappe-bench-mariadb
            - name: "DB_PORT"
              value: "3306"
          resources:
            {}
          securityContext:
            capabilities:
              add:
                - CAP_CHOWN
          volumeMounts:
            - name: sites-dir
              mountPath: /home/frappe/frappe-bench/sites
      containers:
        - name: create-site
          image: "frappe/erpnext:v15.38.4"
          imagePullPolicy: IfNotPresent
          command: ["bash", "-c"]
          # pipefail makes bench_exit_status reflect bench's exit code instead of tee's.
          args:
            - >
              set -o pipefail;

              set -x;

              bench_output=$(bench new-site ${SITE_NAME} \
                --no-mariadb-socket \
                --db-type=${DB_TYPE} \
                --db-host=${DB_HOST} \
                --db-port=${DB_PORT} \
                --admin-password=${ADMIN_PASSWORD} \
                --mariadb-root-username=${DB_ROOT_USER} \
                --mariadb-root-password=${DB_ROOT_PASSWORD} \
                --install-app=erpnext \
                --force \
                | tee /dev/stderr);

              bench_exit_status=$?;

              if [ $bench_exit_status -ne 0 ]; then
                  # Don't consider the case "site already exists" an error.
                  if [[ $bench_output == *"already exists"* ]]; then
                      echo "Site already exists, continuing...";
                  else
                      echo "An error occurred in bench new-site: $bench_output"
                      exit $bench_exit_status;
                  fi
              fi

              set -e;

              rm -f currentsite.txt
          env:
            - name: "SITE_NAME"
              value: "localhost"
            - name: "DB_TYPE"
              value: mariadb
            - name: "DB_HOST"
              value: frappe-bench-mariadb
            - name: "DB_PORT"
              value: "3306"
            - name: "DB_ROOT_USER"
              value: "root"
            - name: "DB_ROOT_PASSWORD"
              valueFrom:
                secretKeyRef:
                  key: mariadb-root-password
                  name: frappe-bench-mariadb
            - name: "ADMIN_PASSWORD"
              value: "changeit"
          resources:
            {}
          securityContext:
            capabilities:
              add:
                - CAP_CHOWN
          volumeMounts:
            - name: sites-dir
              mountPath: /home/frappe/frappe-bench/sites
            - name: logs
              mountPath: /home/frappe/frappe-bench/logs
      restartPolicy: Never
      volumes:
        - name: sites-dir
          persistentVolumeClaim:
            claimName: frappe-bench-erpnext
            readOnly: false
        - name: logs
          emptyDir: {}
```
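Job specs are immutable, and re-applying an unchanged manifest leaves an already completed Job as it is, so running the site creation again needs a new Job name. The chart appends a timestamp to the name; a sketch that swaps in the current time before applying, assuming the name format shown above. `renamed_site_job` and the `STAMP` override variable are hypothetical.

```shell
# Hypothetical helper: copy the Job manifest to stdout with the name's trailing
# 14-digit timestamp replaced by the current time, so each run creates a new Job.
# Usage: renamed_site_job create-new-site-job.yaml | microk8s kubectl apply -n erpnext -f -
renamed_site_job() {
  file=${1:-create-new-site-job.yaml}
  stamp=${STAMP:-$(date +%Y%m%d%H%M%S)}
  # Only the metadata name line matches "name: frappe-bench-erpnext-new-site-<14 digits>".
  sed "s/^\( *name: frappe-bench-erpnext-new-site-\)[0-9]\{14\}\$/\1${stamp}/" "$file"
}
```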

```
~/kube-poc$ microk8s kubectl apply -n erpnext -f create-new-site-job.yaml
job.batch/frappe-bench-erpnext-new-site-20241022020025 created
```
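Instead of polling `kubectl get pods`, you can block until the Job reports completion and then print its logs. A sketch; `wait_for_site_job`, the `KUBECTL` override variable and the 900-second timeout are illustrative. With `backoffLimit: 0` a failed Job never becomes `complete`, so in that case the wait only ends at the timeout.

```shell
# Hypothetical helper: wait for the create-site Job to finish, then print its logs.
# Usage: wait_for_site_job frappe-bench-erpnext-new-site-20241022020025
wait_for_site_job() {
  job=${1:?job name required}
  kc=${KUBECTL:-microk8s kubectl}
  # bench new-site can take several minutes; 900s is an arbitrary upper bound.
  if ! $kc wait --for=condition=complete "job/$job" -n erpnext --timeout=900s; then
    echo "job/$job did not complete; logs follow" >&2
    $kc logs "job/$job" -n erpnext --all-containers=true >&2
    return 1
  fi
  $kc logs "job/$job" -n erpnext
}
```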

```
~/kube-poc$ microk8s kubectl get pods -A
NAMESPACE     NAME                                                   READY   STATUS      RESTARTS      AGE
erpnext       frappe-bench-erpnext-conf-bench-20241022014932-zsb2b   0/1     Completed   0             12m
erpnext       frappe-bench-erpnext-gunicorn-7fdcf996ff-9mtnh         1/1     Running     0             12m
erpnext       frappe-bench-erpnext-new-site-20241022020025-6w2pp     1/1     Running     0             18s
erpnext       frappe-bench-erpnext-nginx-5d6ff6bfd-m8h4k             1/1     Running     0             12m
erpnext       frappe-bench-erpnext-scheduler-7f5b6f7954-5qmp5        1/1     Running     1 (11m ago)   12m
erpnext       frappe-bench-erpnext-socketio-579b548d7c-252w9         1/1     Running     2 (11m ago)   12m
erpnext       frappe-bench-erpnext-worker-d-5fb4d5b4b7-h9l6d         1/1     Running     2 (11m ago)   12m
erpnext       frappe-bench-erpnext-worker-l-5754c45c46-xs6k9         1/1     Running     2 (11m ago)   12m
erpnext       frappe-bench-erpnext-worker-s-8d9687848-8qmdq          1/1     Running     2 (11m ago)   12m
erpnext       frappe-bench-mariadb-0                                 1/1     Running     0             12m
erpnext       frappe-bench-redis-cache-master-0                      1/1     Running     0             12m
erpnext       frappe-bench-redis-queue-master-0                      1/1     Running     0             12m
ingress       nginx-ingress-microk8s-controller-j72zk                1/1     Running     1 (23m ago)   4h17m
kube-system   calico-kube-controllers-796fb75cc-b7s6k                1/1     Running     9 (23m ago)   3d2h
kube-system   calico-node-wr7tq                                      1/1     Running     9 (23m ago)   3d2h
kube-system   coredns-5986966c54-z2chp                               1/1     Running     9 (23m ago)   3d2h
kube-system   dashboard-metrics-scraper-795895d745-n64mz             1/1     Running     9 (23m ago)   3d1h
kube-system   hostpath-provisioner-7c8bdf94b8-5zcbt                  1/1     Running     1 (23m ago)   4h23m
kube-system   kubernetes-dashboard-6796797fb5-7ml5q                  1/1     Running     9 (23m ago)   3d1h
kube-system   metrics-server-7cff7889bd-4f5bh                        1/1     Running     9 (23m ago)   3d1h
```

```
~/kube-poc$ microk8s kubectl logs frappe-bench-erpnext-new-site-20241022020025-6w2pp -n erpnext
Defaulted container "create-site" out of: create-site, validate-config (init)
++ bench new-site localhost --no-mariadb-socket --db-type=mariadb --db-host=frappe-bench-mariadb --db-port=3306 --admin-password=changeit --mariadb-root-username=root --mariadb-root-password=changeit --install-app=erpnext --force
++ tee /dev/stderr
Installing frappe...
Updating DocTypes for frappe : [========================================] 100%
Updating Dashboard for frappe
Installing erpnext...
Updating DocTypes for erpnext : [========================================] 100%
Updating customizations for Address
Updating customizations for Contact
Updating Dashboard for erpnext
*** Scheduler is disabled ***
+ bench_output='
Installing frappe...
Updating DocTypes for frappe : [========================================] 100%
Updating Dashboard for frappe
Installing erpnext...
Updating DocTypes for erpnext : [========================================] 100%
Updating customizations for Address
Updating customizations for Contact
Updating Dashboard for erpnext
*** Scheduler is disabled ***'
+ bench_exit_status=0
+ '[' 0 -ne 0 ']'
+ set -e
+ rm -f currentsite.txt
```
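The log ends with `*** Scheduler is disabled ***`, so scheduled background jobs will not run for this site until the scheduler is enabled. Frappe's `bench --site <site> enable-scheduler` does that; a sketch running it inside the gunicorn Deployment, assuming the bench directory is `/home/frappe/frappe-bench` (the path the Job mounts `sites` under) and the Deployment name `frappe-bench-erpnext-gunicorn` inferred from the pod names above. The helper names and the `KUBECTL` override variable are hypothetical.

```shell
# Hypothetical helper: enable the Frappe scheduler for a site from a running pod.
enable_site_scheduler() {
  site=${1:-localhost}
  kc=${KUBECTL:-microk8s kubectl}
  # The site name is passed as $0 to the inner bash to avoid nested quoting.
  $kc exec -n erpnext deploy/frappe-bench-erpnext-gunicorn -- \
    bash -c 'cd /home/frappe/frappe-bench && bench --site "$0" enable-scheduler' "$site"
}

# Succeeds if bench output read on stdin says the scheduler is disabled.
scheduler_disabled() {
  grep -q 'Scheduler is disabled'
}
```

For example: `microk8s kubectl logs job/<job-name> -n erpnext | scheduler_disabled && enable_site_scheduler localhost`.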

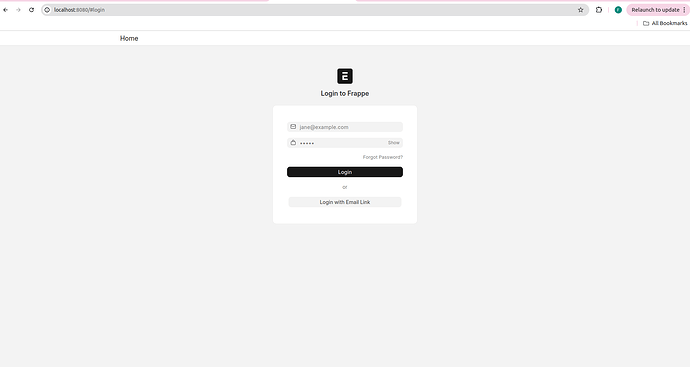

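```
~/kube-poc$ microk8s kubectl port-forward -n erpnext svc/frappe-bench-erpnext 8080:8080
Forwarding from 127.0.0.1:8080 -> 8080
Forwarding from [::1]:8080 -> 8080
Handling connection for 8080
Handling connection for 8080
Handling connection for 8080
Handling connection for 8080
Handling connection for 8080
Handling connection for 8080
```

Now you can open the site at http://localhost:8080 in your browser. `kubectl port-forward` connects directly to the `frappe-bench-erpnext` service (the chart's nginx), so this route does not go through the MicroK8s ingress addon; `ingress.enabled` is `false` in custom-values.yaml. The site name `localhost` works because the chart's nginx selects the Frappe site from `$host` (`frappeSiteNameHeader: "$host"`), which is the request's hostname without the port.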
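Two small checks for this step. `site_from_host` mimics how the chart's nginx derives the site name from `$host` (hostname without the port, lowercased), which is why `localhost:8080` reaches the site named `localhost`; it does not handle IPv6 literals such as `[::1]`. `check_site` requests Frappe's `/api/method/ping` through the forwarded port and prints the HTTP status code. Both helpers and the `CURL` override variable are hypothetical.

```shell
# Hypothetical helper: approximate nginx's $host for a Host header value
# (strip the port, lowercase). IPv6 literals are not handled.
site_from_host() {
  printf '%s\n' "${1%%:*}" | tr '[:upper:]' '[:lower:]'
}

# Hypothetical helper: hit Frappe's ping endpoint and print the HTTP status code.
check_site() {
  url=${1:-http://localhost:8080}
  curl_cmd=${CURL:-curl}
  $curl_cmd -s -o /dev/null -w '%{http_code}' "$url/api/method/ping"
}
```

With the port-forward running, `check_site` should print `200`. To reach the forward from another machine, `kubectl port-forward` also accepts `--address 0.0.0.0`.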